Phase 1: Foundations and the “Second Source” Era (1969-1990)

In its early years, AMD operated primarily as a licensed manufacturer for other companies’ designs, most notably as a backup supplier for Intel.

- 1969: Founded by Jerry Sanders and seven colleagues from Fairchild Semiconductor.

- 1982: Signed a technology exchange agreement with Intel to become a “second source” manufacturer of 8086 and 8088 processors for the IBM PC.

- 1986: Intel terminated the agreement, leading to a massive legal battle that lasted nearly a decade.

Core Technology: Logic chips, SRAM, and x86 Second Source Technology (8086, 80286) licensed from Intel.

Revenue Level:

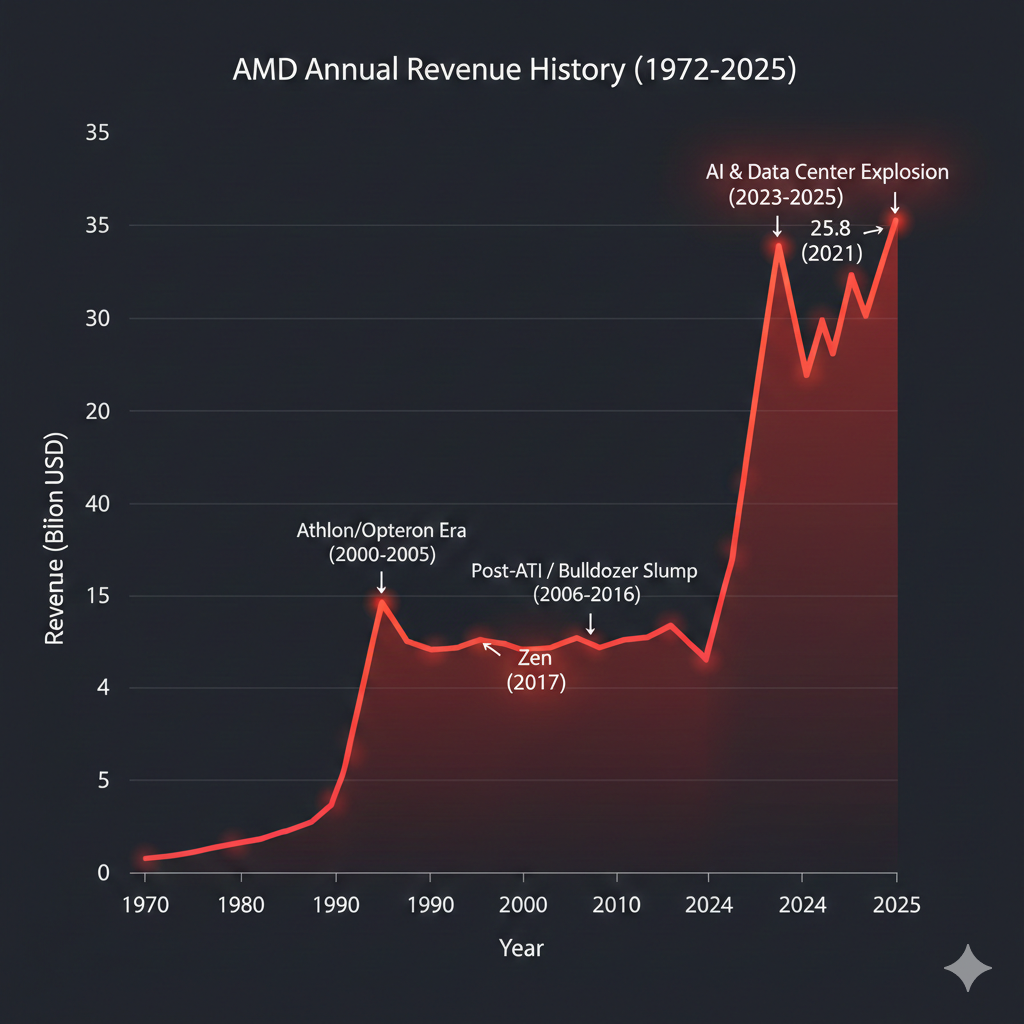

- 1971: Approx 4.6 million dollars.

- 1978: Revenue surpassed 100 million dollars.

- Late 1980s: Revenue fluctuated around 1 billion dollars.

Phase 2: Independent x86 Development and the Golden Era (1991-2005)

AMD began designing its own chips, transitioning from a follower to a legitimate performance leader.

- 1991: Released the Am386, breaking Intel’s monopoly on the 386 market.

- 1996: Acquired NexGen, whose Nx686 design became the architectural foundation for the K6 series.

- 2000: Shipped a 1GHz Athlon processor, the first x86 CPU to reach the 1GHz milestone, beating Intel to the punch.

- 2003: Introduced Opteron and Athlon 64, leading the industry into the 64-bit computing era.

Core Technology: x86-64 Architecture (beating Intel to 64-bit), 1GHz Clock Speed Barrier (Athlon), integrated memory controllers, and dual-core processor technology.

Revenue Level:

- Early 1990s: Approx 1.1-1.5 billion dollars.

- 2000 (The Athlon Era): Revenue jumped to 4.6 billion dollars.

- 2005: Driven by Opteron’s success in data centers, revenue reached 5.8 billion dollars.

Phase 3: The “Bulldozer” Struggle and Financial Crisis (2006-2013)

A period marked by high-stakes acquisitions and architectural missteps that nearly bankrupted the company.

- 2006: Acquired ATI Technologies for 5.4 billion dollars to gain GPU expertise, incurring significant debt.

- 2009: Spun off its manufacturing arm to create GlobalFoundries, becoming a “fabless” semiconductor company.

- 2011: Launched the Bulldozer architecture. It failed to meet performance expectations and struggled with power efficiency, causing AMD to lose significant market share to Intel.

Core Technology: APU (Accelerated Processing Unit) concept (CPU+GPU integration) and the Bulldozer Modular Architecture (which suffered from poor single-core performance and high power consumption).

Revenue Level:

- 2006-2007: Revenue appeared to grow to 5-6 billion dollars due to the ATI merger, but net income turned sharply negative.

- 2012-2013: Revenue stagnated around 5.3 billion dollars, with server market share dropping to single digits.

Phase 4: The Lisa Su Era and Zen Revolution (2014-2021)

Under the leadership of CEO Lisa Su, AMD executed one of the greatest turnarounds in corporate history.

- 2017: Released the Zen architecture (Ryzen), offering massive leaps in multi-core performance and value.

- 2019: Partnered with TSMC to launch 7nm processors, surpassing Intel in manufacturing process technology for the first time.

- 2020: Introduced Zen 3, achieving leadership in single-core performance and gaming.

Core Technology: Zen Microarchitecture, Chiplet (MCM) Design, and the aggressive adoption of 7nm/5nm advanced process nodes from TSMC.

Revenue Level:

- 2017 (Zen Launch): Revenue recovered to 5.3 billion dollars.

- 2021: Driven by remote work demand and product leadership, revenue surged to 16.4 billion dollars with significantly improved gross margins.

Phase 5: AI Leadership and Data Center Dominance (2022-Present)

AMD is currently focused on high-performance computing (HPC) and artificial intelligence.

- 2022: Completed the 49 billion dollar acquisition of Xilinx, making AMD a leader in FPGA and adaptive computing.

- 2023-2025: Launched the Instinct MI300 and MI350 series AI accelerators to compete directly with NVIDIA in the generative AI market.

- 2026 Forecast: Set to release the MI450 series, further strengthening its position in cloud and enterprise AI.

Core Technology: CDNA 3/4 Architectures (AI-specific), 3D V-Cache, FPGA technology, and the Instinct MI300 Series (including the MI300A, the world’s first data center APU).

Revenue Level:

- 2023: Revenue reached 22.7 billion dollars.

- 2024: Revenue grew to 25.8 billion dollars, with Data Center revenue accounting for roughly half of total sales.

- 2025 (Forecast): Revenue is expected to exceed 32 billion dollars, reflecting the massive ramp-up of AI accelerators (MI300/325).

AMD Competitive Analysis (2026 Strategy)

In 2026, AMD occupies a unique strategic position as the only company capable of competing at a high level in both the CPU (vs. Intel/ARM) and GPU (vs. NVIDIA) markets. While it has successfully eroded Intel’s dominance, it now faces the monumental challenge of breaking NVIDIA’s AI monopoly.

1. AMD vs. NVIDIA: The AI Accelerator Battle

NVIDIA remains the incumbent with roughly 90% of the AI data center market, but AMD’s Instinct MI-series is the primary challenger for cloud providers seeking a “non-NVIDIA” alternative.

- Software Ecosystem (ROCm): This is AMD’s Achilles’ heel. Throughout 2025 and 2026, AMD has aggressively invested in ROCm 7.x, partnering with Meta and Microsoft to ensure seamless migration from CUDA.

- Performance & Memory: AMD’s MI350 and MI450 series leverage HBM3e/HBM4 memory. By offering higher memory capacity and bandwidth than NVIDIA’s H200/Blackwell in certain configurations, AMD is winning “Inference” workloads where large model weights require massive on-chip memory.

- Supply Strategy: Unlike NVIDIA, which is heavily constrained by TSMC’s CoWoS capacity, AMD has diversified its advanced packaging partnerships, allowing it to offer shorter lead times to tier-two cloud providers.

2. AMD vs. Intel: The x86 Market Dominance

The narrative has shifted from “AMD as an underdog” to “AMD as the performance leader.”

- Data Center (EPYC): As of early 2026, AMD’s EPYC “Turin” (Zen 5) has captured nearly 40% of server revenue. Intel’s Xeon “Clearwater Forest” is fighting back, but AMD’s Chiplet efficiency consistently delivers a better Total Cost of Ownership (TCO).

- Consumer PC (Ryzen): Following Intel’s 13th/14th Gen stability issues in previous years, AMD has gained significant mindshare. The Ryzen AI 400 series (Strix Halo) is currently the benchmark for “AI PCs,” outperforming Intel’s Lunar Lake and Panther Lake in integrated graphics and NPU (Neural Processing Unit) tasks.

3. AMD vs. ARM & Apple: The Battle for Efficiency

With the rise of Qualcomm Snapdragon X and NVIDIA’s rumored ARM-based PC chips (N1X), the x86 architecture itself is under fire.

- The Power Challenge: ARM-based chips offer superior battery life for ultra-thin laptops. AMD is countering this with Zen 5c (dense cores), which aims to match ARM’s power efficiency without sacrificing the software compatibility of the x86 ecosystem.

- NVIDIA’s Entry: 2026 marks NVIDIA’s serious entry into the PC CPU space with an ARM-based chip. This creates a new “three-way war” in the laptop segment (AMD vs. Intel vs. NVIDIA).

2026 SWOT Analysis Summary

| Dimension | Strengths | Weaknesses |
| --- | --- | --- |
| Technology | Mastery of Chiplets and 3D V-Cache. | Software stack (ROCm) still lacks CUDA’s 15-year maturity. |
| Market | Dominant in High-Performance Computing (HPC). | Weak presence in low-end mobile and enterprise office fleets. |

| Outlook | Factors |
| --- | --- |
| Opportunities | Open-source AI initiatives (PyTorch/OpenAI Triton); expansion into FPGA (Xilinx) for edge AI. |
| Threats | NVIDIA’s vertical integration (NVLink/Networking); ARM-based custom silicon from Google/Amazon. |

Competitive Market Share Projection (2026)

- Server CPU: AMD 38% | Intel 55% | ARM 7%

- AI Accelerators: NVIDIA 85% | AMD 10% | Others (ASICs) 5%

- Desktop CPU: AMD 45% | Intel 55%


AMD vs. NVIDIA: Technical Deep Dive (2026 Edition)

As of early 2026, the technical rivalry between AMD and NVIDIA has reached a fever pitch. While NVIDIA’s Blackwell remains the industry gold standard for large-scale training, AMD’s Instinct MI350 series (CDNA 4) has successfully closed the hardware gap, specifically targeting NVIDIA’s lead in AI inference and memory capacity.

1. Architectural Philosophy: Blackwell vs. CDNA 4

| Technical Pillar | NVIDIA Blackwell (B200/B300) | AMD Instinct (MI355X) |
| --- | --- | --- |
| Manufacturing | TSMC 4NP (Custom 4nm) | TSMC N3P (Advanced 3nm) |
| Design Logic | Dual-die Coherent: Two dies linked by a 10TB/s interconnect, appearing as a single monolithic chip to software. | Chiplet Master: Multiple 3nm XCDs (Accelerator Complex Dies) linked with I/O dies. Extreme scalability but higher power draw. |
| Transistor Count | 208 Billion | 185 Billion |
| Power (TDP) | 700W – 1200W | 1000W – 1400W (Liquid Cooled) |

- NVIDIA’s Edge: The “unified” die approach reduces latency between cores, making it superior for the most complex synchronized training tasks.

- AMD’s Edge: By using a more advanced 3nm process, AMD achieves higher transistor density and raw compute potential per square millimeter of silicon.

2. Memory & Compute: The “Inference” Advantage

AMD has pivoted to address the industry’s biggest bottleneck: Memory Bound Inference.

- VRAM Capacity: The MI355X features 288GB of HBM3e, which is 1.5x to 1.6x the capacity of the standard B200 (192GB/180GB). This allows developers to fit massive models like Llama 4 (400B+) on fewer GPUs, drastically reducing the “Communication Tax” between chips.

- New Data Types (FP4/FP6): Both companies now support FP4 for ultra-fast low-precision AI. However, AMD has uniquely focused on FP6, which offers a middle ground—higher precision than FP4 for better model accuracy, while still being significantly faster than FP8.

- FP64 (HPC): AMD remains the king of scientific computing. The MI355X offers nearly 2.1x the FP64 performance of the B200, making it the preferred choice for weather simulation and nuclear physics.
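The capacity argument above can be checked with simple arithmetic. The following is a minimal sketch, assuming that only the model weights count toward memory (KV cache, activations, and quantization scale metadata are ignored) and that 1 GB means 1e9 bytes. The function names are illustrative and not part of any vendor tool.

```python
import math

# Bits stored per parameter for each format. Assumption: weights only,
# with no KV cache, activations, or per-block scale metadata.
BITS_PER_PARAM = {"FP16": 16, "FP8": 8, "FP6": 6, "FP4": 4}


def weight_footprint_gb(params: float, fmt: str) -> float:
    """Return the weight footprint in GB (1 GB = 1e9 bytes)."""
    return params * BITS_PER_PARAM[fmt] / 8 / 1e9


def min_gpus(params: float, fmt: str, gpu_memory_gb: float) -> int:
    """Smallest accelerator count whose combined memory holds the weights."""
    return math.ceil(weight_footprint_gb(params, fmt) / gpu_memory_gb)


if __name__ == "__main__":
    params = 400e9  # the 400B-parameter figure quoted above
    for fmt in BITS_PER_PARAM:
        counts = {mem: min_gpus(params, fmt, mem) for mem in (288, 192, 180)}
        print(fmt, weight_footprint_gb(params, fmt), counts)
```

Under these assumptions a 400B-parameter model at FP8 needs 400 GB for weights, which fits on two 288GB accelerators but needs three at 192GB or 180GB. At FP4 the weights shrink to 200 GB and fit on a single 288GB device. Real deployments need extra headroom for the KV cache, so treat these counts as lower bounds.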

3. Software: CUDA vs. ROCm 7.2

This is where the battle is won or lost.

- NVIDIA CUDA: The “walled garden.” It is incredibly mature with a massive library of pre-optimized kernels. For 90% of developers, it remains the “default” because it requires zero troubleshooting.

- AMD ROCm 7.2: In early 2026, AMD achieved a milestone by releasing ROCm 7.2 with full Windows/Linux parity and “Day 0” support for major frameworks. On mainstream models like Stable Diffusion XL and Llama 3.1, ROCm now achieves performance parity with CUDA. However, for niche or proprietary research code, CUDA still holds a slight lead in ease of deployment.

4. Direct Specification Comparison (2026 Flagships)

| Specification | NVIDIA B200 (SXM) | AMD MI355X (OAM) | Winner |
| --- | --- | --- | --- |
| Memory Capacity | 180GB – 192GB HBM3e | 288GB HBM3e | AMD |
| Memory Bandwidth | 8.0 TB/s | 8.0 TB/s | Tie |
| AI Performance (FP8) | 9.0 PFLOPS | 10.1 PFLOPS | AMD |
| AI Performance (FP4) | 20.0 PFLOPS | 20.0 PFLOPS | Tie |
| Interconnect Speed | 1.8 TB/s (NVLink 5.0) | 1.5 TB/s (Infinity Fabric) | NVIDIA |
| Software Stack | CUDA 12.x (Mature) | ROCm 7.x (Open Source) | NVIDIA |
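To see why memory bandwidth matters for memory-bound inference, a rough upper bound on single-stream decode speed can be derived from the bandwidth row of the table. This is a back-of-the-envelope sketch, assuming a dense model whose full weights are read from HBM once per generated token at batch size 1. KV-cache traffic and compute time are ignored, and the 80 GB model size in the example is hypothetical.

```python
def decode_tokens_per_second(bandwidth_tb_s: float, weight_gb: float) -> float:
    """Upper bound on decode rate when weight reads dominate.

    Assumes every weight byte is streamed from memory once per token
    (dense model, batch size 1); real throughput will be lower.
    """
    if weight_gb <= 0:
        raise ValueError("weight_gb must be positive")
    bytes_per_second = bandwidth_tb_s * 1e12
    bytes_per_token = weight_gb * 1e9
    return bytes_per_second / bytes_per_token


if __name__ == "__main__":
    # 8.0 TB/s from the table; 80 GB of weights is a hypothetical model size.
    print(decode_tokens_per_second(8.0, 80.0))
```

With equal 8.0 TB/s bandwidth on both parts, this bound is identical for the two accelerators. In this simplified model the capacity difference affects how many devices the weights must be split across, not the per-device streaming rate.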

Summary Recommendation

- Build an NVIDIA Cluster if: You are training a “Frontier Model” from scratch (e.g., GPT-5 class) where multi-node interconnect efficiency (NVLink) and software stability are your top priorities.

- Build an AMD Cluster if: You are focused on Inference at Scale or Fine-Tuning. The 288GB VRAM allows for much higher throughput and lower Total Cost of Ownership (TCO) for serving models to millions of users.

AMD vs. Intel: Technical Deep Dive (2026 Edition)

As of early 2026, the rivalry has shifted from a “core-count war” to a battle over advanced process nodes (18A vs. N2) and architectural efficiency in AI-driven workloads.

1. Process Node & Manufacturing

2026 marks the first time in over a decade that Intel has a legitimate chance to reclaim the “Process King” title.

- Intel (18A / 1.8nm): Intel is now in high-volume production of the 18A node. It introduces two revolutionary technologies: RibbonFET (Intel’s version of Gate-All-Around) and PowerVia (backside power delivery). Backside power delivery allows for significantly better power efficiency and higher clock speeds by moving power traces to the back of the wafer.

- AMD (TSMC 3nm / 2nm): AMD currently relies on TSMC 4nm- and 3nm-class nodes for its Zen 5 products and is preparing for TSMC N2 (2nm) with the Zen 6 “Medusa” architecture later this year. While TSMC N2 is expected to have superior transistor density, Intel’s 18A aims for higher performance-per-watt through its aggressive power delivery architecture.

2. Consumer Segment: AI PC & Laptop Efficiency

The “AI PC” is the main marketing driver in 2026.

- Intel (Panther Lake – Core Ultra Series 3): Built on the 18A node, Panther Lake is a massive leap in efficiency. It features the Xe3 “Celestial” GPU architecture, which, according to recent 2026 benchmarks, can deliver up to 78% better gaming performance than previous integrated solutions. Intel’s NPU 4.0 provides ~50 TOPS, matching the industry’s “Copilot+ PC” requirements.

- AMD (Ryzen AI 400 Series): AMD counters with the Ryzen AI 400, featuring the XDNA 2 NPU that delivers up to 60 TOPS—currently the highest in the consumer segment. AMD’s advantage lies in its Strix Halo designs, which integrate massive GPUs with performance comparable to dedicated mid-range graphics cards (like an RTX 4060m), making them the choice for portable workstations.

3. Data Center: EPYC “Venice” vs. Xeon “Clearwater Forest”

AMD dominates in core density, while Intel is pivoting toward specialized efficiency cores.

- AMD EPYC (Zen 6 “Venice”): Utilizing the “Chiplet” mastery, AMD has pushed core counts to 192 cores using Zen 6c. AMD’s primary technical edge is Memory Bandwidth; with 12-channel DDR5 support, EPYC continues to lead in data-heavy workloads like SQL databases and large-scale virtualization.

- Intel Xeon (Clearwater Forest): This is Intel’s first major data center chip on the 18A node. It features up to 288 “Darkmont” E-cores. Intel’s strategy is focused on Compute Density and Advanced Matrix Extensions (AMX), allowing the CPU to handle AI inference tasks that would normally require a dedicated GPU.
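The memory bandwidth claim for a 12-channel DDR5 platform follows from a simple formula: peak bandwidth is channels times transfer rate times bytes per transfer. The sketch below assumes 64-bit (8-byte) data channels and a DDR5-6400 speed grade. The speed grade is an assumption for illustration, since the text above does not state one.

```python
def ddr5_peak_bandwidth_gb_s(
    channels: int, mega_transfers_per_s: int, bus_bytes: int = 8
) -> float:
    """Theoretical peak bandwidth in GB/s (1 GB = 1e9 bytes).

    bus_bytes=8 models a 64-bit data channel; ECC bits are not counted.
    """
    return channels * mega_transfers_per_s * 1e6 * bus_bytes / 1e9


if __name__ == "__main__":
    # 12 channels from the text; DDR5-6400 is an assumed speed grade.
    print(ddr5_peak_bandwidth_gb_s(12, 6400))
```

With these assumed inputs the theoretical peak is about 614 GB/s per socket. Sustained bandwidth in real workloads is lower than this figure.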

Technical Specification Comparison (2026 Benchmarks)

| Feature | Intel (Panther Lake / 18A) | AMD (Ryzen AI 400 / N3P-N2) | Winner |
| --- | --- | --- | --- |
| Transistor Type | RibbonFET (GAA) | FinFET (Zen 5) / GAA (Zen 6) | Intel (Early Lead) |
| Power Delivery | PowerVia (Backside) | Traditional Frontside | Intel |
| AI NPU Performance | 50 TOPS | 60 TOPS | AMD |
| Integrated Graphics | Xe3 Celestial (Strong Ray Tracing) | RDNA 3.5+ (High Raw FPS) | Tie |
| Max Server Cores | 288 (E-cores) | 192 (High-Perf Zen 6c) | AMD (Performance/Core) |

Summary Recommendation

- Go with Intel (2026) for Ultrabooks and Efficiency. The 18A node and PowerVia technology have significantly improved battery life and single-threaded performance, making Intel the leader in “Premium Thin & Light” laptops.

- Go with AMD (2026) for Multi-threaded Productivity and Data Centers. AMD’s chiplet architecture and superior NPU TOPS make it the better choice for content creation, heavy multitasking, and high-density server environments.

AMD vs. ARM: Technical Deep Dive (2026 Edition)

In 2026, the boundary between AMD (x86) and ARM has blurred. AMD is no longer just a “high-power” player; it has effectively matched ARM’s efficiency in many areas. Meanwhile, ARM has matured from a mobile-first architecture into a formidable data center and workstation powerhouse.

1. Architectural Philosophy: CISC vs. RISC

This remains the fundamental technical divide, though modern decoders have narrowed the functional gap.

- AMD (x86-64 / Zen 6 “Medusa”):

  - Logic: Uses Complex Instruction Set Computing (CISC). Modern Zen 6 cores use advanced “uOp” (micro-operation) caching to minimize the power-heavy decoding process.

  - 2026 Edge: Seamless Legacy Support. AMD’s x86 architecture runs decades of enterprise software natively. In October 2024, AMD and Intel formed the x86 Ecosystem Advisory Group to make the instruction set more consistent across vendors and to simplify its future evolution.

- ARM (ARMv9.3 / Neoverse V3/N3):

  - Logic: Uses Reduced Instruction Set Computing (RISC). Fixed-length instructions allow for simpler, more power-efficient decoders.

  - 2026 Edge: Customization. ARM licenses its IP, allowing giants like AWS (Graviton 5) or Microsoft to build “bespoke” silicon. These chips often include custom hardware accelerators for specific cloud tasks that a generic AMD chip cannot match.
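The decoder point above can be illustrated with a toy model. In the sketch below, the variable-length encoding is hypothetical (the first byte of each instruction stores its total length) and is not the real x86 format. The fixed-width case uses 4-byte instructions. With a fixed width, every instruction start can be computed independently. With variable lengths, each start depends on decoding the previous instruction first.

```python
def fixed_boundaries(code: bytes, width: int = 4) -> list[int]:
    """Start offsets when every instruction is exactly `width` bytes.

    Each offset is just i * width, so all of them can be found in parallel.
    """
    return list(range(0, len(code), width))


def variable_boundaries(code: bytes) -> list[int]:
    """Start offsets for a toy encoding whose first byte holds the length.

    The next start is unknown until the current length byte is read,
    which creates a serial dependency along the instruction stream.
    """
    offsets = []
    pos = 0
    while pos < len(code):
        offsets.append(pos)
        length = code[pos]
        if length == 0:
            raise ValueError("zero-length instruction in toy stream")
        pos += length
    return offsets


if __name__ == "__main__":
    print(fixed_boundaries(bytes(16)))
    # Toy stream with instruction lengths 2, 5, 1, 3.
    print(variable_boundaries(bytes([2, 0xAA, 5, 1, 2, 3, 4, 1, 3, 9, 9])))
```

Caching already-decoded micro-operations, as mentioned above for Zen cores, lets a core skip this boundary-finding work on code it has recently executed.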

2. Efficiency: The Gap is Closing

- ARM’s Territory: ARM still holds a slight lead in Idle Power Consumption. In 2026, ARM-based laptops (like the Snapdragon X3) consistently achieve 22-25 hours of battery life.

- AMD’s Counter: With the Ryzen AI 400 series built on TSMC 3nm/2nm, AMD has achieved “Efficiency Parity” in active workloads. In heavy multitasking or video conferencing (e.g., Teams/Zoom), AMD’s Zen 5/6 cores often consume less total energy than ARM because they complete the task faster (“Race to Sleep” strategy).
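The “Race to Sleep” argument is an energy calculation: energy equals power times time, summed over the active and idle portions of a fixed time window. The sketch below uses entirely hypothetical power and timing figures to show how a chip with higher active power can still use less total energy if it finishes sooner.

```python
def task_energy_joules(
    active_w: float, active_s: float, idle_w: float, window_s: float
) -> float:
    """Energy used over a fixed window: active phase, then idle for the rest."""
    if active_s > window_s:
        raise ValueError("task does not finish within the window")
    return active_w * active_s + idle_w * (window_s - active_s)


if __name__ == "__main__":
    # Hypothetical figures: both chips idle at 0.5 W over a 10 s window.
    fast = task_energy_joules(active_w=15.0, active_s=4.0, idle_w=0.5, window_s=10.0)
    slow = task_energy_joules(active_w=8.0, active_s=9.0, idle_w=0.5, window_s=10.0)
    print(fast, slow)
```

With these made-up numbers the faster chip uses 63.0 J against 72.5 J for the slower one, despite drawing nearly twice the active power. The result flips if the idle power of the faster chip is much higher, which is why idle consumption remains the deciding factor for light workloads.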

3. Data Center & Cloud Performance

- AMD EPYC (Zen 6 “Venice”): Focuses on Compute Density. AMD can pack up to 192 full-performance cores in a single socket. Its technical advantage is I/O and Memory Throughput, consistently outperforming ARM in heavy database and virtualization workloads.

- ARM (Neoverse V3): Focuses on Total Cost of Ownership (TCO). ARM chips like the NVIDIA Grace or Google Axion provide better “Performance-per-Dollar” for cloud-native microservices (web servers, containerized apps) where single-core peak performance is less critical than aggregate throughput across thousands of cores.

4. Technical Comparison Table (2026)

| Feature | AMD (x86 Zen 6) | ARM (Neoverse / Cortex) | Winner |
| --- | --- | --- | --- |
| Instruction Set | CISC (Complex) | RISC (Simple) | ARM (Efficiency) |
| Manufacturing | TSMC 3nm / 2nm | TSMC 3nm / 2nm | Tie |
| Software Fit | Universal / Legacy | Cloud-Native / Mobile | AMD (Compatibility) |
| AI Integration | NPU-heavy (60+ TOPS) | Scalable Vector (SVE2) | AMD (Edge AI) |
| Max Core Count | 192 (Zen 6c) | 256+ (Custom SoC) | ARM (Density) |
| Battery Life | 18 – 20 Hours | 22 – 25 Hours | ARM |

5. The “Plot Twist” of 2026: AMD Sound Wave

The biggest technical news of 2026 is that AMD is now an ARM player too.

- To compete for the Microsoft Surface and ultra-mobile market, AMD launched the “Sound Wave” APU, which uses ARM CPU cores paired with AMD Radeon Graphics.

- This proves that AMD’s core strength is moving toward Heterogeneous Computing (mixing the best IP), rather than being strictly tied to the x86 instruction set.
